In this blog post, I’d like to share my idea for the final project, which will take the form of a grant proposal.

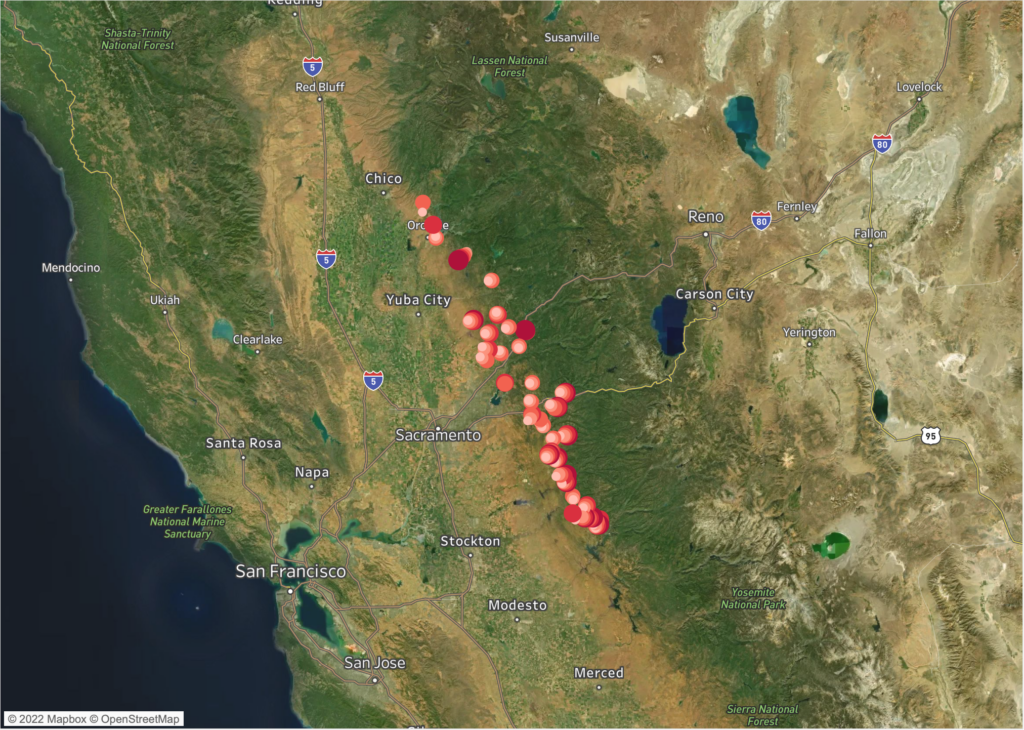

Name: Renewable Energy Consumption Forecast Map (still WIP!)

Product format: Website with an interactive map

Abstract: A grant proposal to build a web app hosting a dynamic, interactive map of energy consumption forecasts. The website will also include basic calculations and simulations to help consumers estimate their energy usage, cost, and credits.

Context: One of the key challenges in transitioning from fossil fuels to renewable energy is managing the energy grid as supply and demand fluctuate. Fossil fuel supply can be very predictable: you can burn as much as you need. Renewable energy, by contrast, has a kind of “cap” on production (on top of the storage challenge). The only way to increase production is to add more hardware, such as solar panels. We must remember that solar panels are not that sustainable to begin with, and it makes little sense to install 100 additional panels just to cover peak usage on two hot summer days.

Problem Space: Managing supply and demand for renewable energy

Why does this matter: Managing supply and demand in an agile manner is clearly necessary for the transition from fossil fuels to renewable energy. However, doing it in a socially just manner could be pivotal in rebalancing power between energy companies and consumers. Environmental and climate advantages and disadvantages tend to be distributed unfairly, to the detriment of marginalized communities. It is important to keep in mind that we should not simply aim to transition from fossil fuels to renewable energy; we should also leverage this moment to address environmental and climate injustices. After all, it would be unfortunate if we successfully transitioned away from fossil fuels but the energy companies were still in a position of power that allowed them to put profit before people.

Current solution: The consumer-facing lever for demand management is raising prices to reduce demand (i.e., peak-hour surge pricing).

Proposed additional solution: Incentivize households to reduce electricity usage at peak times, when demand is highest, by offering energy credits or cash rewards. The rationale is that households should be “rewarded” for saving energy during these peak hours, since doing so makes more energy available for the rest of the grid.
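To make the reward idea more concrete, here is a minimal sketch of how a credit calculator on the website might work. It assumes a hypothetical policy in which a household earns a fixed credit for every kWh it avoids using during a fixed peak window, measured against its own baseline hourly profile. The names `PeakCreditPolicy`, `peak_usage`, and `peak_credit`, the 17:00 to 21:00 window, and the rate are all illustrative assumptions, not part of any existing utility program.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class PeakCreditPolicy:
    # Hypothetical policy parameters (assumptions for illustration only).
    credit_per_kwh: float  # credit (or cash) earned per kWh saved at peak
    peak_hours: range      # hour indices of a 24-hour profile, e.g. range(17, 21)


def peak_usage(hourly_kwh: list[float], peak_hours: range) -> float:
    """Sum a 24-hour consumption profile (kWh per hour) over the peak hours."""
    return sum(hourly_kwh[h] for h in peak_hours)


def peak_credit(baseline_kwh: list[float], actual_kwh: list[float],
                policy: PeakCreditPolicy) -> float:
    """Credit earned for using less energy at peak than the household baseline.

    Usage above the baseline earns nothing; this sketch applies no penalty.
    """
    saved = (peak_usage(baseline_kwh, policy.peak_hours)
             - peak_usage(actual_kwh, policy.peak_hours))
    return max(saved, 0.0) * policy.credit_per_kwh


# Usage: a household that cuts its peak load from 2.0 to 1.5 kWh per hour.
policy = PeakCreditPolicy(credit_per_kwh=0.25, peak_hours=range(17, 21))
baseline = [0.5] * 24
actual = [0.5] * 24
for h in policy.peak_hours:
    baseline[h] = 2.0
    actual[h] = 1.5
print(peak_credit(baseline, actual, policy))  # 2.0 kWh saved -> 0.5
```

Comparing against the household’s own baseline (rather than a fixed threshold) is one possible design choice; how that baseline is defined fairly would itself be an important question for the proposal.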

What do we need to make it happen: Transparency of energy usage as the foundation for information sharing, and a place to tackle market dynamics where incentives can be mobilized. In other words: a website with an energy consumption and reward calculator.